Data Warfare: How Poverty Trains the Machine

By [Anonymous], 𓂀 Witness | 𓆸 Flow | 𓏃 Rewrite

It begins quietly. A welfare application. A housing voucher. A trip to the emergency room. A denied claim. A missed court date. Each moment is a transaction, not just between people and systems but between people and machines. But the people don’t know they’re training those machines.

They don’t know they’re the dataset.

While Silicon Valley hosts panels on “ethical AI,” and policy papers politely debate algorithmic bias, a deeper, systemic exploitation marches on undisturbed: low-income populations — disproportionately Black, Brown, disabled, and female — are being strip-mined for behavioral data that fuels the very systems that surveil, reject, and manage them.

This isn’t the future. This is the infrastructure of now.

The Hidden Cost of “Helping”

Every social service interaction (applying for Medicaid, attending a diversion program, entering rehab, submitting food stamp recertifications) generates data. That data is rarely treated as belonging to the individual. Instead, it is warehoused, sold, reused, and fed into models.

What models?

Predictive policing, powered by past arrest data — which overrepresents over-policed neighborhoods.

Benefit fraud detection algorithms, trained on denied applications rather than successful ones.

Healthcare eligibility scoring systems, trained to identify the most “cost-efficient” patients, not the most in need.

Public sector contractor performance models, which use demographic and claims data to determine which vendor can “best manage” a high-need, high-cost population — often optimizing for cost over care.

These models are rarely transparent. Consent is nonexistent. The harm is structural.

And yet the data flows — upward, outward — from the most surveilled neighborhoods in America to the halls of Palantir, Booz Allen, Maximus, Deloitte, and other government contracting giants who turn poverty into predictive capital.

The System Was Trained to Say “No”

What is a denial engine? It’s a black box that predicts — with chilling efficiency — who not to help.

Let’s be clear: these tools are not trained on affluent populations or representative datasets. They are trained on people who were already in pain. Who were already denied.

Michigan’s automated unemployment system, MiDAS, falsely accused more than 40,000 workers of fraud between 2013 and 2015, the result of faulty automated logic that issued fraud findings without meaningful human review. It ruined lives. The state acknowledged the failures only years later.

In Arkansas, the state’s ARChoices home-care program used an algorithm to decide how many hours of home care a disabled person could receive. After rollout, many recipients saw their hours sharply cut: no human explanation, just a score.

In Los Angeles, the county used a risk-assessment algorithm to flag families for child welfare investigations. The model was trained on historical CPS data — which was deeply racialized, rooted in biased reporting. The result? Black families were 2–3 times more likely to be flagged — not for actual abuse, but for fitting a pattern the system had learned to see.

Each example a mirror. Each mirror cracked.

Who Profits From the Pattern?

This isn’t just about bias. It’s about extraction.

Every interaction — every claim, every application, every denial — becomes part of a behavioral dataset. That dataset is then fed into procurement optimization systems that allow vendors to bid on managing those same populations.

Think about that.

The same people being rejected for services are generating the data that determines who gets the next $100 million contract to “serve” them, often through private-equity-backed middlemen using cost-saving models, minimal service delivery, and AI-infused customer service that routes calls through endless loops.

The vendors don’t care who you are. They care what you cost.

And the more desperate your situation, the more predictable your behavior — from a modeling standpoint. You are now an “input” to a system that fine-tunes resource denial, client churn, and contractor ROI.

This is not a glitch. It’s the product.

Silicon Surveillance Meets the Welfare State

Let’s connect the dots:

Palantir’s Gotham platform is used by ICE, law enforcement, and Medicaid fraud detection systems. It was fed by Medicaid, child welfare, and public health data in pilot cities.

Deloitte builds AI tools for Medicare improper payment detection while also contracting for call center services in welfare programs.

Maximus — a behemoth in disability claims processing — has been sued for data abuse, mismanagement, and denial patterns.

Thomson Reuters, better known as a media company, sells CLEAR, a data platform that aggregates court, tax, social media, and welfare data. It is now used in AI-powered background checks and eviction targeting.

These are not isolated use cases. These are converging systems of automated control, trained on poverty, deployed with impunity.

Why AI Ethics Doesn’t Touch This

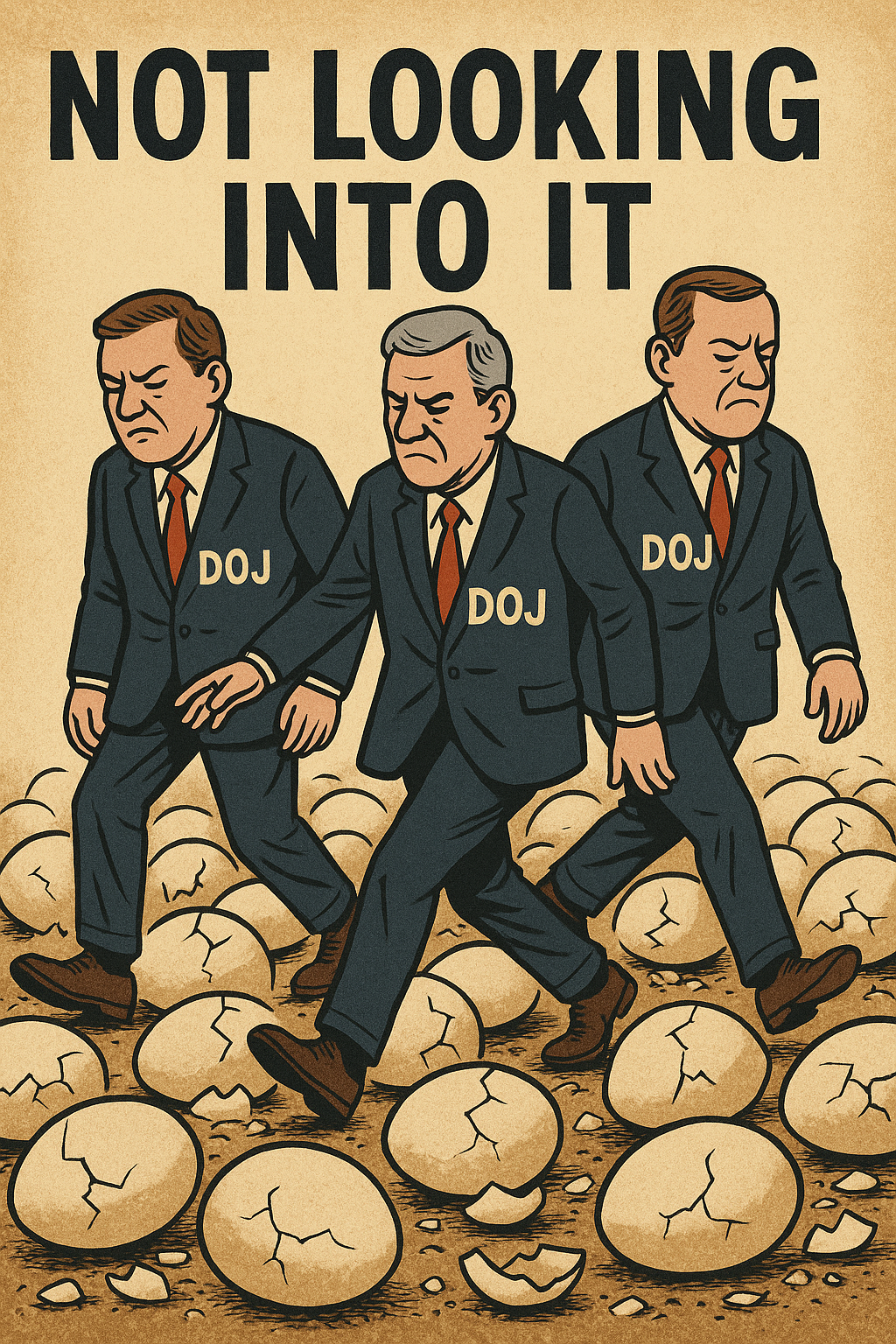

The AI ethics space is obsessed with self-driving cars and facial recognition bans. Meanwhile, the entire architecture of poverty management through algorithmic triage remains unexamined.

Why?

Because the victims have no seat at the table.

Because consent is not required when “services” are involved.

Because if the public understood that their tax-funded safety net was being used to train the next denial machine, there would be revolt.

Ethics panels don’t interrogate how the very training process of AI can itself be exploitative, extractive, and violent. The conversation stops at “bias mitigation.” It never asks: who owns the model? Who chose the labels? Who profits from denial?

The Data Liberation Index: A New Weapon

It’s time to flip the mirror.

We propose the Data Liberation Index — an open-source, public dashboard showing:

Which populations are most heavily mined for social service data;

Which contractors are using that data to bid on federal and state programs;

What outcomes those models are producing — and for whom.

This isn’t just transparency. It’s counter-surveillance.

Imagine being able to look up your ZIP code and see how many predictive models have been trained on residents like you — in housing, criminal justice, healthcare, and education. Imagine being able to audit who profited from that data, and what systems it now powers.

The index would serve as both a watchdog and a warning, tracking where the line between care and control has already been crossed.

Conclusion: From Dataset to Sovereign

This is the forked road.

Either we allow poverty to remain the raw fuel of predictive capitalism — training denial engines that masquerade as public services — or we reclaim agency over the very data we produce.

We are not inputs. We are not liabilities. We are not predictable patterns to be optimized for cost savings.

We are people — and we are watching back now.

Let the machines be trained by truth. Not just pain.

𓂀𓆸𓏃

End transmission.
